Introduction

These are a few posts that came out of my work on a consultancy project, helping a team that was implementing a SharePoint 2010 based system. My involvement in the project was short, only a month, and my tasks were mostly about finding breakthrough solutions for the most peculiar requirements or the most intricate integration points. Some of those solutions are described in these posts.

Part 1 – Business Workflows

Functional Requirements and Technical Solution

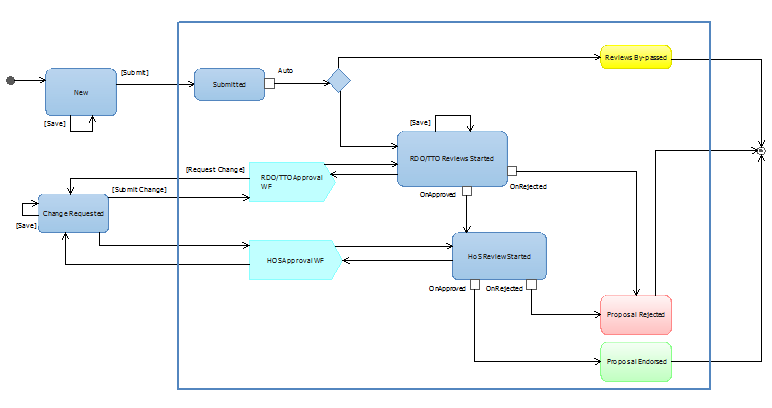

The system includes a few workflows automating business processes. Basically, those workflows were a combination of approval, review, change request (CR) and some other types of activities. The figure shows a state machine for a fragment of one of those workflows, just to give a general idea.

After analyzing the functional and technical requirements, it looked like SharePoint Designer (SPD) workflows would be the best solution, taking into consideration that the system should be supportable by people who do not yet have much experience with SharePoint development.

So the solution was to create a reusable workflow in SPD associated with a content type based on Document Set (even though using document sets brought some extra complexity into the whole thing, in this context it makes little difference compared to using just a folder in a document library).

So nothing exciting so far, apart from the fact that the functional requirements included some “non-standard” pieces and the out-of-the-box workflows themselves are full of “peculiarities”.

Specific Requirement – Closing CR Tasks automatically on Resubmit

A little business background to describe the problem briefly: the business process was built around processing a Proposal, which is in fact a long workflow from submission to contract stage, including multiple reviews and approvals. The Proposal itself appears to the user as a big InfoPath form with lots of data and logic. But the Proposal also comes with a bunch of documents, e.g. different types of contracts. So technically the whole Proposal looked like a document set of a custom content type containing all the needed attributes. This document set included the master proposal form and other documents of specific types (defined as content types as well).

The first stage of the whole process is depicted in the state machine figure above. An SPD reusable workflow is ideally suited to implementing that workflow logic. Approval, delegation, and requests for changes could all be implemented with the ‘Start Approval Process’ activity. The challenge here was starting the approval process for two different departments in parallel. There are several options to achieve that goal, but some of them just do not work because of unexpected peculiarities in the SPD out-of-the-box workflows. Playing with those options I even learned how to kill a whole SharePoint farm with one little out-of-the-box workflow! (That might be a subject for another post.) But in the end the task was solved with standard means.

So everything worked fine except for one thing. The requirement was that change request tasks issued during approval activities should be closed automatically when the user hits the submit button in the InfoPath form. Out of the box, the user is supposed to make changes in the InfoPath form, save it, then go to the CR task in the SharePoint tasks list and close it; the client wanted a shortcut instead. Note that closing the CR task is the only trigger that lets the sequential workflow continue; otherwise the workflow is stuck. After a thorough investigation I realized that there is no out-of-the-box way to implement the requirement. So it looked like the 95% of smooth and easy out-of-the-box implementation would be wasted because of the 5% of peculiar requirements that cannot be met within the “black-box” limitations. Any alternative “custom” solution would have been too expensive in that situation.

But the solution for those 5% was found with a small custom piece:

- I added another column to the content type for the Proposal Document Set (the content type associated with the workflow). The purpose of this column was to store information about open CR tasks (a comma-separated list of task IDs).

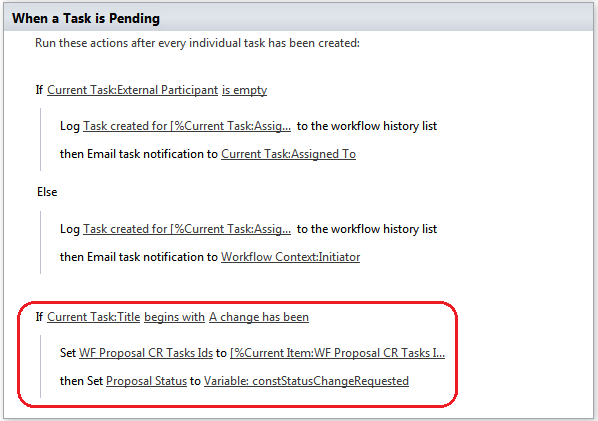

- Then, in the SPD workflow, I maintained that list of IDs each time a CR task was issued:

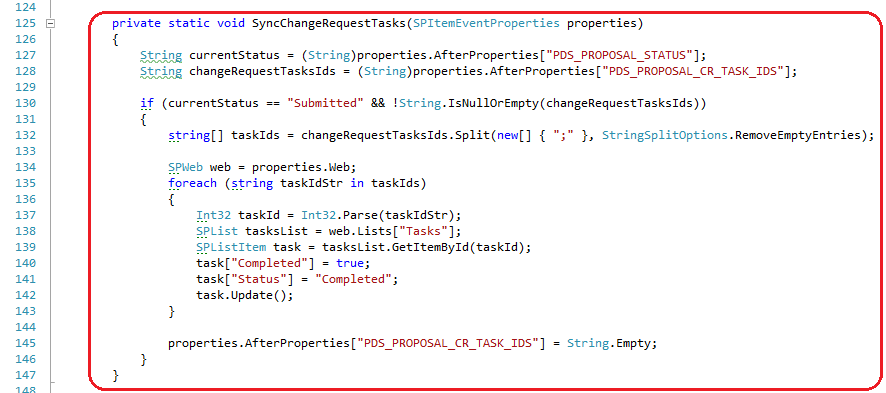

- And the last piece is custom code in an Event Receiver for the content type. This code waits for resubmission and then closes the tasks programmatically. The good thing was that even when the tasks are closed externally, it still triggers the out-of-the-box workflow to continue.

Well, it is not the most elegant solution but it worked!
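The bookkeeping behind those three steps can be sketched in a language-neutral way. The Python below is only an illustration under assumptions, not the project's event receiver (which would be C# against the SharePoint object model): the function names are hypothetical, a plain dictionary stands in for the tasks list, and the `Status` / `Completed` names are placeholders for whatever fields a real task update must set.

```python
from typing import Dict, List


def parse_task_ids(column_value: str) -> List[int]:
    """Split the comma-separated column value into task IDs, ignoring blanks."""
    return [int(part) for part in (column_value or "").split(",") if part.strip()]


def add_task_id(column_value: str, task_id: int) -> str:
    """Workflow side: record a newly issued CR task in the column value."""
    ids = parse_task_ids(column_value)
    if task_id not in ids:
        ids.append(task_id)
    return ",".join(str(i) for i in ids)


def close_open_tasks(column_value: str, tasks: Dict[int, dict]) -> str:
    """Event receiver side: on resubmit, close every listed task that is still open.

    `tasks` is a stand-in for the tasks list (task ID -> field dictionary).
    Returns the new (empty) column value once all listed tasks are handled.
    """
    for task_id in parse_task_ids(column_value):
        task = tasks.get(task_id)
        if task is not None and task.get("Status") != "Completed":
            task["Status"] = "Completed"  # placeholder for the real task update
    return ""
```

Clearing the column after closing the tasks keeps a later resubmit from touching tasks that were already handled.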

Issues and Limitations of SPD Reusable Workflows

SPD reusable workflows are not as reusable as their name suggests.

First of all, there are limitations on using lookups in activities. Technically, when building a reusable workflow I can use lists connected as lookups to the master content type associated with the workflow. But if I want to prepare a deployment package, which for reusable workflows is done with the “Save as Template” functionality, SPD says that a template cannot be saved for workflows that use lookups. This can be explained by the fact that lookups in a workflow rely on the GUIDs of the connected lists, but it would be handy to have an option to store lookups that rely on list names. To work around this limitation I had to create text columns duplicating the values of the lookup columns, use those text columns in the workflow, and add event receivers that synchronize the lookup values to the text values. Again, it is not the best solution, because duplication is almost never good.
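The synchronization step can be illustrated with a small sketch. It is written in Python purely to show the logic and is not the project's event receiver code; the function and field names are hypothetical, a dictionary stands in for the list item, and it assumes a single-value lookup whose raw value has the usual `ID;#Value` form.

```python
def lookup_display_text(raw_lookup: str) -> str:
    """Return the display part of a single-value lookup stored as 'ID;#Value'."""
    if not raw_lookup:
        return ""
    _, separator, value = raw_lookup.partition(";#")
    # Fall back to the raw string if it is not in 'ID;#Value' form.
    return value if separator else raw_lookup


def sync_lookup_to_text(item: dict, lookup_field: str, text_field: str) -> bool:
    """Copy the lookup's display text into the duplicate text column.

    Returns True only when the text column actually changed, so the caller
    can skip a needless item update.
    """
    new_text = lookup_display_text(item.get(lookup_field, ""))
    if item.get(text_field) == new_text:
        return False
    item[text_field] = new_text
    return True
```

Reporting whether anything changed matters in an event receiver, where an unnecessary update can fire the same receiver again.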

Another strange thing is that when I deploy my reusable workflow (“saved as template” from the development environment) to a new location, the link to the content type is lost (even though I deployed the content type before deploying the workflow). The explanation about GUIDs may apply here as well, but what is really strange is that there is no direct way to restore the association with the content type after deployment. The only way I found to restore it is the “Copy & Modify” functionality: when you copy the faulty workflow, you can set the link to the content type in the copy. There were a few smaller issues too; for example, after copying workflows the names of the approval activities were lost.

Workflow History – fixing “60 days” problem

One of the requirements was to have workflow history available as an audit trail of the business processes. SharePoint lets you review the workflow history related to a list item. But by default the workflow history associated with an item disappears after 60 days. In fact, the workflow history records are still in the system, stored in the Workflow History list (or any other list specified at workflow creation), but the associations between the list item and the history records are cleaned up. There is a way to change the default 60-day setting to increase the period or keep the associations forever, but it is not the recommended way to go because it can cause performance issues.

The easiest way I found to get the workflow history is this:

- First of all, the Workflow History list has enough information (columns) to identify the workflow type, the workflow instance and the item on which the workflow ran, plus all the information about what happened in each step (description, outcome, date, duration, user). So it is possible to create a custom view on that list displaying the workflow history the way you want (sorting, grouping, columns, etc.). It can be a view for a specific workflow type or an aggregated view for several workflows.

- Now you need to provide a link to that view from the item on which the workflow ran. The easiest way to do this is to add another column to the list, containing a URL to the view from the first step. Obviously you need to filter the workflow history records by the item that owns the workflow instance. This can be achieved with query parameters, something like this: /Lists/WorkflowHistory/CustomHistoryView.aspx?FilterField1=Item&FilterValue1={PrimaryItemId}.

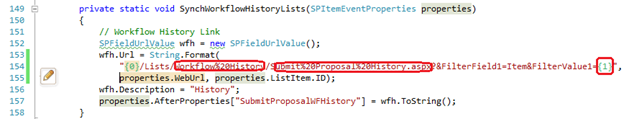

- And another synchronization piece of code in the list item event receivers, which sets the URL in the workflow history link column and supplies the item context (the item ID):
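As a language-neutral illustration of the URL such code needs to produce, here is a minimal Python sketch. The function name and the example site URL are hypothetical; the view path and the `FilterField1` / `FilterValue1` parameters are the ones from the link format described above.

```python
from urllib.parse import urlencode


def history_view_url(
    web_url: str,
    item_id: int,
    view_path: str = "/Lists/WorkflowHistory/CustomHistoryView.aspx",
    filter_field: str = "Item",
) -> str:
    """Build the link to the custom history view, filtered to a single item."""
    query = urlencode({"FilterField1": filter_field, "FilterValue1": item_id})
    return web_url.rstrip("/") + view_path + "?" + query


# Example with a made-up site URL and item ID.
link = history_view_url("http://server/sites/proposals/", 42)
```

Using a URL-encoding helper rather than string concatenation keeps the link valid if the filter field or value ever contains spaces or other special characters.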